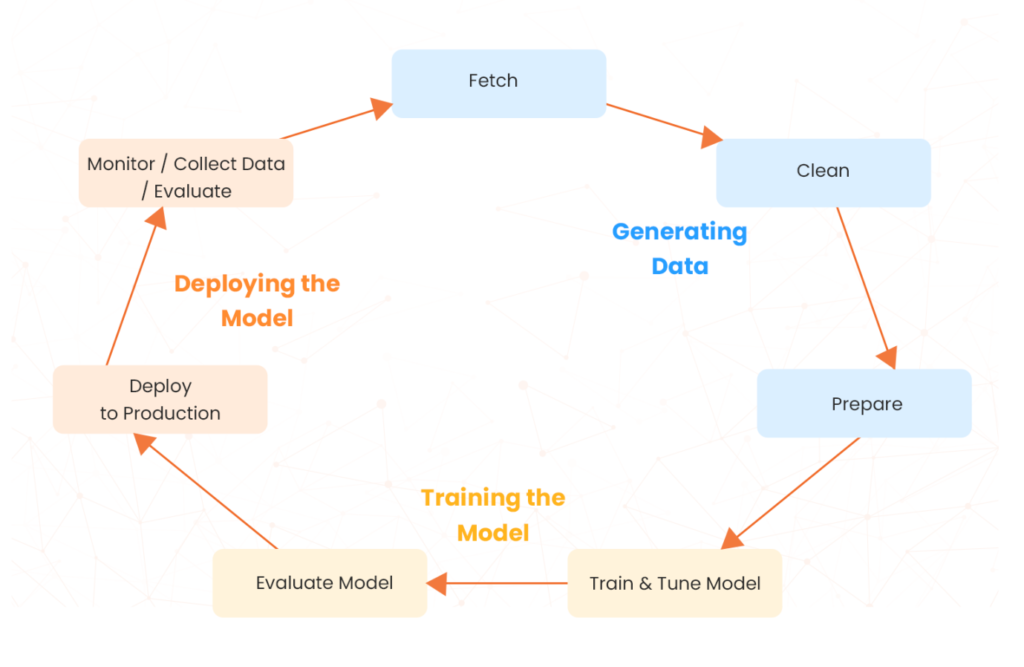

The machine learning (ML) lifecycle is a cyclical, iterative process of continuous data ingestion and model updates, which can create complex security risks. Following recommended security practices at every stage of the ML workflow is crucial to the security of ML applications. This blog post discusses AWS best practices for securing ML models, as covered in the AWS Certified Machine Learning Engineer - Associate (MLA-C01) exam. Security is a shared responsibility between AWS and the customer, and the AWS Well-Architected Framework provides architectural best practices for designing secure ML workloads on AWS. These practices must address ML-specific security concerns in addition to traditional software development security.

Security as a Focus Area in the AWS Certified Machine Learning Engineer - Associate Exam

Different teams work on different phases of the ML lifecycle, and not all team members are security experts. Because maintaining security in every phase is everyone’s job, all members must understand ML-specific threats and vulnerabilities and know how to make decisions that meet security requirements.

AWS Certified Machine Learning Engineer - Associate is an intermediate-level certification, positioned between the foundational and specialty levels, and AWS recommends at least one year of experience using Amazon SageMaker and other AWS services before taking it. The target candidates for MLA-C01 are backend software developers, DevOps developers, data engineers, and data scientists. Security is a crucial component of this exam: Domain 4, ML Solution Monitoring, Maintenance, and Security (24% of scored content), covers security best practices that align with the design principles of the Security Pillar in the AWS Well-Architected Framework. Candidates are expected to apply these guidelines, regardless of their role in an ML environment, to keep ML workloads secure.

AWS Security Best Practices

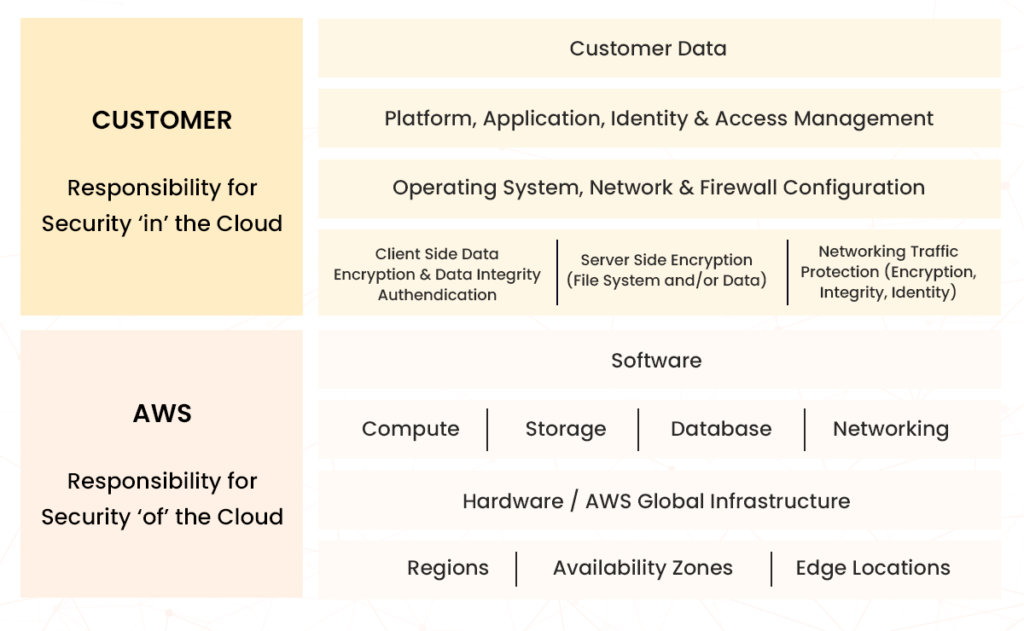

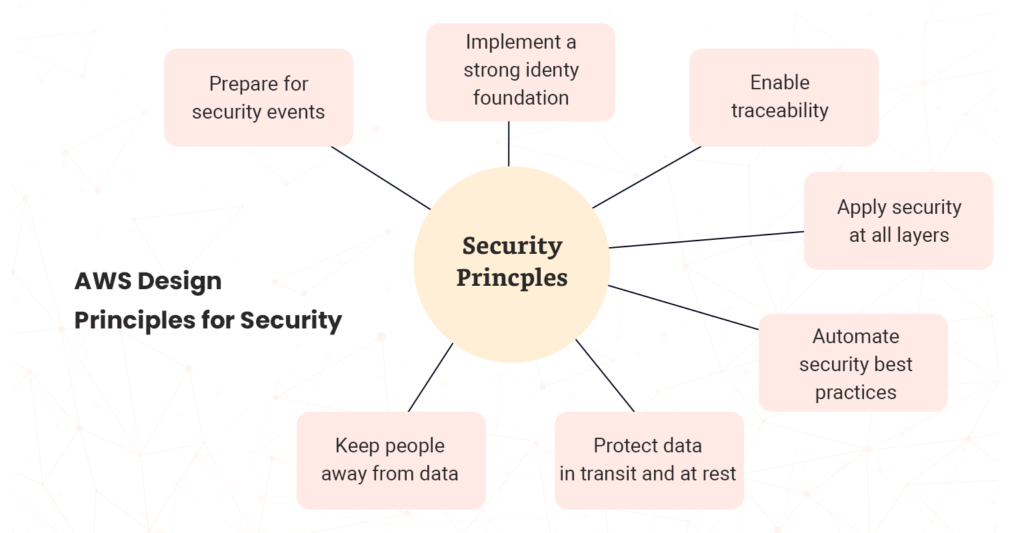

Security refers to the measures that protect ML deployments from vulnerabilities, threats, and misuse. Security is a shared responsibility between the customer and AWS: AWS is responsible for “security of the cloud,” meaning the infrastructure that runs AWS services, and customers are responsible for “security in the cloud,” meaning how they configure and use those services. Following the AWS design principles for security, apply a defense-in-depth approach that places multiple security controls at all layers of the workload, from the network edge to the application and its data.

You can build a secure ML environment by applying these principles throughout the ML lifecycle.

AWS offers many services and tools to protect each phase of this lifecycle, which you can choose based on your industry’s specific requirements. Amazon SageMaker, the center of AWS machine learning services, includes built-in security features and integrates with other AWS security services for additional protection.

Secure AWS Resources

In an ML workload on AWS, resources include data, algorithms, code, hyperparameters, trained model artifacts, and infrastructure. Protect these resources across every phase of the ML lifecycle using the following methods:

Least Privilege Access

Least privilege access is the security principle for granting only the permissions required to complete a task.

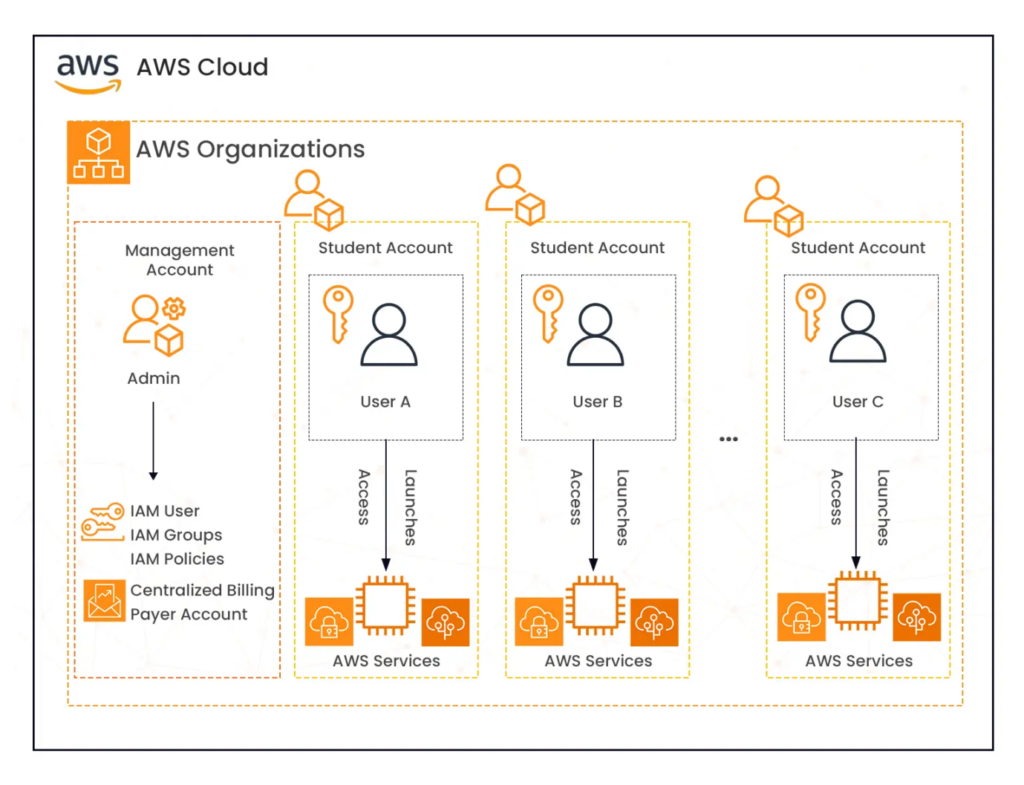

- Use AWS Organizations to build a multi-account architecture that segregates workloads and centrally manages all accounts in the organization. Apply service control policies (SCPs) to set the maximum permissions available to member accounts; SCPs act as guardrails and do not grant permissions on their own.
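As an illustration of an SCP guardrail, the following Python sketch builds a policy document that denies creating SageMaker training jobs, processing jobs, and models unless the request includes VPC subnets. It relies on the `sagemaker:VpcSubnets` condition key with the `Null` condition operator; the `Sid`, the function name, and the choice of actions are assumptions for this example, so check the Service Authorization Reference for the actions that support this key before attaching anything similar to an organizational unit.

```python
import json


def build_vpc_guardrail_scp() -> dict:
    """Return a hypothetical SCP that denies SageMaker jobs created without a VPC."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Illustrative statement ID; any unique Sid works.
                "Sid": "DenySageMakerWithoutVpc",
                "Effect": "Deny",
                "Action": [
                    "sagemaker:CreateTrainingJob",
                    "sagemaker:CreateProcessingJob",
                    "sagemaker:CreateModel",
                ],
                "Resource": "*",
                # "Null": "true" matches requests where the key is absent,
                # i.e. no subnets were supplied, so those requests are denied.
                "Condition": {"Null": {"sagemaker:VpcSubnets": "true"}},
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON you would paste into the SCP editor.
    print(json.dumps(build_vpc_guardrail_scp(), indent=2))
```

Because SCPs only restrict, member accounts still need IAM policies that allow the SageMaker actions in the first place; the SCP just removes the option of running them outside a VPC.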

Authentication and Authorization

Use AWS Identity and Access Management (IAM) for unified authentication and authorization across AWS services and to enforce least privilege. The following IAM features help:

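- IAM users: identities that authenticate to AWS, establishing who is making a request.

- IAM policies: authorize an identity to perform only the permitted actions on specified resources.

- IAM roles: provide temporary credentials to the users, applications, or federated identities that assume them.

- IAM service roles: roles that an AWS service, such as SageMaker, assumes to act on your behalf.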
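To make the service-role and least-privilege ideas concrete, here is a minimal Python sketch that builds the two documents a SageMaker execution role needs: a trust policy that lets `sagemaker.amazonaws.com` assume the role, and a permissions policy scoped to a single S3 bucket. The bucket name, prefixes, and function names are hypothetical, and a working execution role usually also needs permissions such as writing to CloudWatch Logs (and pulling images from Amazon ECR for custom containers), which are left out here.

```python
import json


def sagemaker_trust_policy() -> dict:
    """Trust policy: only the SageMaker service may assume this role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "sagemaker.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def least_privilege_s3_policy(bucket: str, data_prefix: str, output_prefix: str) -> dict:
    """Permissions policy: read training data and write model artifacts, nothing else."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read-only access to the training data prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"{bucket_arn}/{data_prefix}/*",
            },
            {
                # Write access limited to the model artifact prefix.
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"{bucket_arn}/{output_prefix}/*",
            },
            {
                # Listing is allowed only under the training data prefix.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": bucket_arn,
                "Condition": {"StringLike": {"s3:prefix": [f"{data_prefix}/*"]}},
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and prefixes, for illustration only.
    print(json.dumps(sagemaker_trust_policy(), indent=2))
    print(json.dumps(
        least_privilege_s3_policy("example-ml-bucket", "training-data", "model-artifacts"),
        indent=2,
    ))
```

Splitting read and write access by prefix means a compromised training job cannot overwrite its own input data or read artifacts from unrelated projects.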

Enable Data Encryption

Data protection preserves the confidentiality and integrity of your ML workload data. AWS data protection services provide encryption, key management, and sensitive data discovery.

- AWS Key Management Service: creates and manages cryptographic keys from a central location.

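- AWS Secrets Manager: securely stores, manages, and retrieves secrets such as database credentials and API keys.

- Amazon Macie: discovers sensitive data in Amazon S3 and evaluates bucket access controls to help verify that the data is not publicly shared or exposed.

- Amazon GuardDuty: continuously monitors AWS accounts, workloads, and data in Amazon S3 for malicious activity and unauthorized behavior.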
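Encryption requirements are usually enforced with policy rather than convention. The following Python sketch builds an S3 bucket policy for a hypothetical training-data bucket that denies any request not made over TLS (`aws:SecureTransport`) and denies uploads that do not request SSE-KMS encryption (`s3:x-amz-server-side-encryption`). It is one common pattern, not the only one: because the second statement rejects uploads that omit the encryption header, it can conflict with clients that rely on the bucket's default encryption setting instead.

```python
import json


def encryption_bucket_policy(bucket: str) -> dict:
    """Hypothetical bucket policy enforcing encryption in transit and at rest."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Encryption in transit: reject any request made without TLS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                # Encryption at rest: reject uploads that do not ask for SSE-KMS.
                "Sid": "DenyNonKmsUploads",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/*",
                "Condition": {
                    "StringNotEquals": {"s3:x-amz-server-side-encryption": "aws:kms"}
                },
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(encryption_bucket_policy("example-ml-bucket"), indent=2))
```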

Network Isolation

The building block of a well-governed and secure ML workflow is a private and isolated compute and network environment. You can isolate ML resources from other sensitive systems using the following AWS services to improve security and compliance.

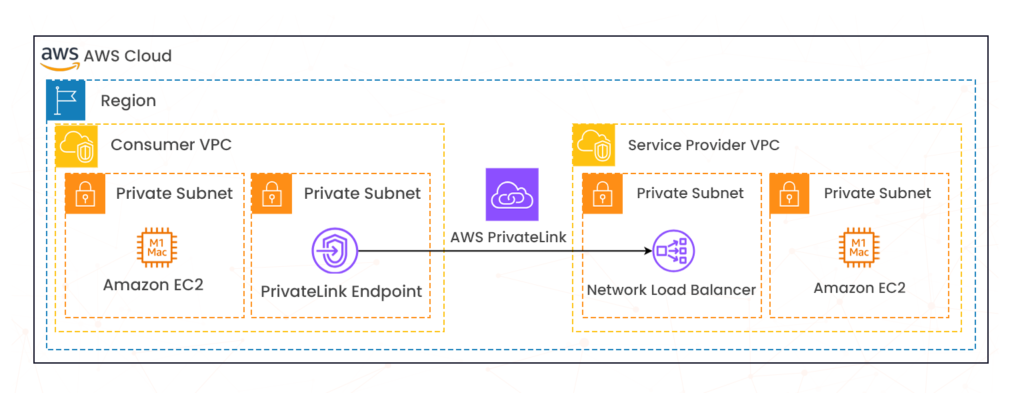

- Amazon Virtual Private Cloud (Amazon VPC): provides a logically isolated section of the AWS Cloud. Use separate VPCs to isolate infrastructure by workload or organizational entity, and use subnets to isolate the tiers of your application within a single VPC.

- AWS PrivateLink: provides private connectivity between VPCs and AWS services, bypassing the public internet.

- Amazon SageMaker: can run training jobs, processing jobs, and model endpoints inside your VPC when you supply subnets and security groups, so data exchanged with your resources stays within that network. You can also reach the SageMaker API and runtime privately through interface VPC endpoints powered by PrivateLink.

- AWS Direct Connect: provides a dedicated network connection between AWS resources and the customer’s on-premises infrastructure.
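On the SageMaker side, network isolation is configured per job. The sketch below assembles the network-related fields of a `CreateTrainingJob` request: `VpcConfig` with subnets and security groups, `EnableNetworkIsolation`, and `EnableInterContainerTrafficEncryption`. The helper name and the subnet and security group IDs are placeholders; in practice you would merge this dictionary into the full request (algorithm, role, input and output configuration) that you pass to the boto3 SageMaker client's `create_training_job` method.

```python
def training_job_network_settings(
    subnets: list[str],
    security_group_ids: list[str],
    network_isolation: bool = True,
) -> dict:
    """Hypothetical helper returning the network fields of a CreateTrainingJob request."""
    if not subnets or not security_group_ids:
        # VpcConfig requires both subnets and security group IDs.
        raise ValueError("at least one subnet and one security group are required")
    return {
        # Run training containers in your private subnets.
        "VpcConfig": {
            "Subnets": list(subnets),
            "SecurityGroupIds": list(security_group_ids),
        },
        # Block network calls from the container (peers in a cluster excepted).
        "EnableNetworkIsolation": network_isolation,
        # Encrypt traffic between instances in distributed training.
        "EnableInterContainerTrafficEncryption": True,
    }


if __name__ == "__main__":
    # Placeholder IDs for illustration only.
    print(training_job_network_settings(["subnet-0example1"], ["sg-0example1"]))
```

Failing early when subnets or security groups are missing keeps a misconfigured job from being submitted at all, which complements an SCP that denies such requests at the organization level.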

AWS Machine Learning Governance

Model governance is a framework that gives systematic visibility into model development, validation, and usage. A safe ML environment goes beyond just security; it must also incorporate governance for responsible ML deployment. A well-implemented model governance framework should minimize the number of interfaces required to view, track, and manage lifecycle tasks to make it easier to monitor the ML lifecycle at scale.

Enforce ML Lineage

ML lineage is the ability to track how and why a model was developed. Lineage covers three components: code, data, and model. AWS offers several services that maintain ML lineage by recording the origin, movement, and transformations of these components.

- Use AWS Glue, a fully managed extract, transform, and load (ETL) service, to maintain data lineage.

- Amazon SageMaker Lineage Tracking creates and stores information about the steps of an ML workflow from data preparation to model deployment.

- The Amazon SageMaker Model Registry stores models and model versions in a centralized repository.

- AWS CodeCommit, a fully managed source control service, keeps a versioned history of code changes.

- AWS CodeBuild maintains a history of build executions, enabling you to trace which code changes correspond to specific builds.

- AWS CodeDeploy keeps records of deployment activities, assisting in tracking which versions of code are running in specific environments.
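The services above record lineage for you; the idea underneath is to tie each model version to the exact code and data that produced it. As a minimal, service-agnostic sketch (not the SageMaker Lineage Tracking API), the Python below fingerprints a training dataset with SHA-256 and stores the hash alongside a commit ID and a model package ARN. The record layout, helper names, and example values are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical record linking the three lineage components."""
    code_commit: str        # source control commit that built the model
    data_sha256: str        # fingerprint of the exact training data
    model_package_arn: str  # model version in a model registry


def fingerprint(data: bytes) -> str:
    """Content hash: any change to the training data changes this value."""
    return hashlib.sha256(data).hexdigest()


def record_lineage(code_commit: str, training_data: bytes, model_package_arn: str) -> str:
    """Serialize a lineage record as sorted JSON so it is stable to store and diff."""
    record = LineageRecord(code_commit, fingerprint(training_data), model_package_arn)
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    print(record_lineage(
        "3f2a9c1",
        b"feature,label\n0.4,1\n",
        "arn:aws:sagemaker:us-east-1:111122223333:model-package/demo-group/1",
    ))
```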

Detect Bias

Responsible ML requires bias detection in data and model outcomes. Amazon SageMaker Clarify detects potential bias during data preparation, after model training, and in your deployed model by examining the attributes you specify.
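To show what a pre-training bias metric measures, the following Python sketch computes two metrics that SageMaker Clarify reports: class imbalance, CI = (n_a - n_d) / (n_a + n_d), and difference in proportions of labels, DPL = q_a - q_d, where a and d are the two facet groups of the attribute you specify and q is the fraction of positive labels in each group. This is a plain re-implementation for illustration on made-up data, not Clarify's own code.

```python
def class_imbalance(n_a: int, n_d: int) -> float:
    """CI in [-1, 1]: 0 means both facets have the same number of rows."""
    return (n_a - n_d) / (n_a + n_d)


def positive_rate(labels: list[int]) -> float:
    """Fraction of rows with the positive label (1)."""
    return sum(labels) / len(labels)


def difference_in_label_proportions(labels_a: list[int], labels_d: list[int]) -> float:
    """DPL in [-1, 1]: positive values mean facet a receives positive labels more often."""
    return positive_rate(labels_a) - positive_rate(labels_d)


if __name__ == "__main__":
    # Made-up labels for the two facet groups of one attribute.
    labels_a = [1, 1, 1, 1, 0, 0]  # 6 rows, positive rate 4/6
    labels_d = [1, 0, 0, 0]        # 4 rows, positive rate 1/4
    print(class_imbalance(len(labels_a), len(labels_d)))  # 0.2
    print(difference_in_label_proportions(labels_a, labels_d))
```

Values far from zero flag the attribute for closer review; what counts as "far" depends on the use case and is a judgment the team has to make.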

Monitoring and Auditing

Monitoring and auditing provide the capabilities to track, monitor, and log activities in your ML environment. After you deploy an ML model, you must monitor and audit it to ensure that it continues to meet its business, security, and compliance goals. AWS offers many tools and services to automate monitoring and auditing.

- SageMaker Model Monitor: detects data drift and anomalies.

- AWS Config: provides access to the historical configurations of your resources.

- Amazon CloudWatch: collects metrics, logs (through CloudWatch Logs), and events from AWS resources.

- AWS CloudTrail: tracks user activity and API calls.
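SageMaker Model Monitor compares live traffic against a baseline computed from the training data. As a simplified stand-in for that comparison (not Model Monitor's actual algorithm), the sketch below scores drift in one numeric feature with the population stability index, PSI = Σ (q_i - p_i) ln(q_i / p_i), where p_i and q_i are the baseline and current fractions of values in bin i. The bin edges, function names, and smoothing constant are assumptions; commonly cited rules of thumb treat a PSI somewhere above 0.1 to 0.25 as meaningful drift.

```python
import math
from bisect import bisect_right


def bin_fractions(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin defined by sorted edges (len(edges) + 1 bins)."""
    if not values:
        raise ValueError("values must be non-empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    baseline: list[float], current: list[float], edges: list[float], eps: float = 1e-6
) -> float:
    """PSI between baseline and current distributions; 0 means identical bins."""
    p = bin_fractions(baseline, edges)
    q = bin_fractions(current, edges)
    # eps keeps the log finite when a bin is empty in one of the samples.
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


if __name__ == "__main__":
    baseline = [0.1 * i for i in range(100)]  # roughly uniform on [0, 10)
    shifted = [v + 5 for v in baseline]       # same shape, shifted by 5
    edges = [2.5, 5.0, 7.5]
    print(population_stability_index(baseline, baseline, edges))  # 0.0
    print(population_stability_index(baseline, shifted, edges))   # large, signals drift
```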

AWS Cloud Compliance

Compliance refers to the regulatory and ethical requirements, often specific to an industry, that ML systems must meet to maintain security and data protection. AWS supports 143 security standards and compliance certifications; key compliance programs include PCI-DSS, HIPAA/HITECH, FedRAMP, GDPR, FIPS 140-2, and NIST 800-171. For details, see AWS Compliance Programs. To learn whether an AWS service is within the scope of a specific compliance program, see AWS Services in Scope by Compliance Program and choose the program that interests you.

Conclusion

ML development is a non-linear, cyclical process, which makes security a continuous effort. With multiple teams, tools, and frameworks involved, ML workflows are complex environments. Securing them requires a solid understanding of these practices, which is also essential for passing the AWS Certified Machine Learning Engineer - Associate (MLA-C01) exam.